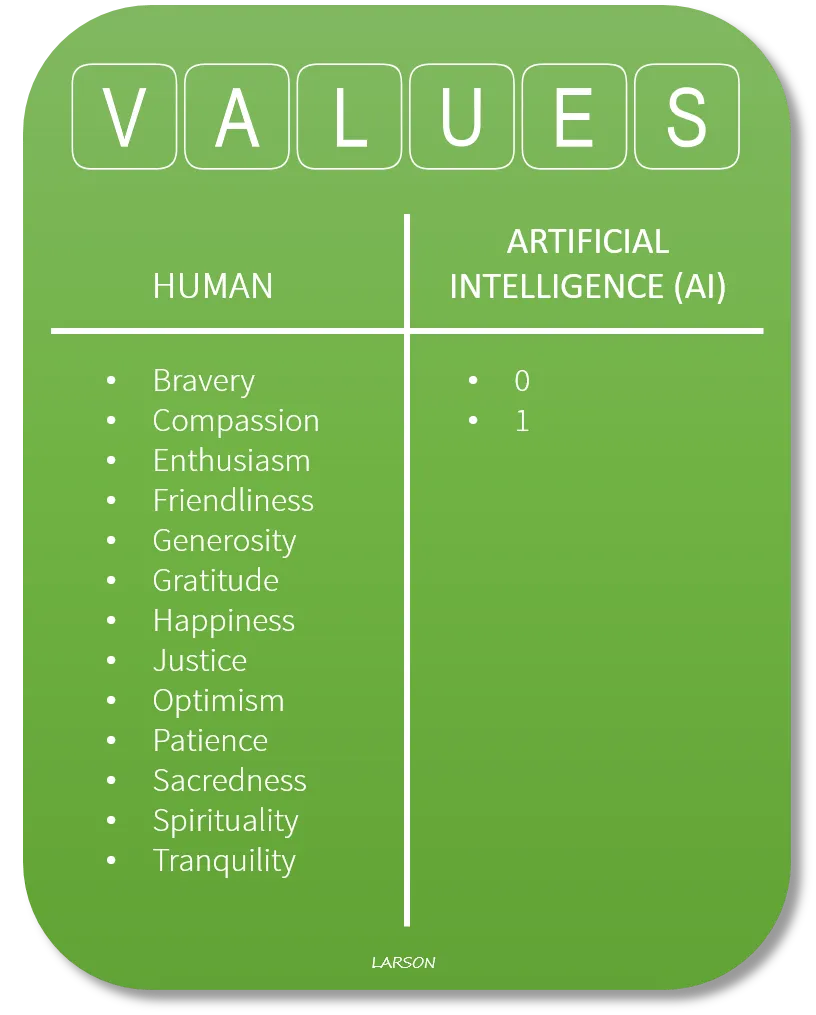

As artificial intelligence continues to permeate various aspects of our lives, understanding the values that underpin these systems has become increasingly critical. Recent research that my colleagues and I conducted at Purdue University has revealed a troubling imbalance in the human values embedded in AI training datasets. While these systems excel in areas of utility and information, they often fall short in promoting essential prosocial values like empathy, justice, and civic responsibility. This study highlights the ethical implications of such disparities and sets the stage for a deeper examination of how we can better align AI systems with the diverse values of the communities they serve.

| Category | Details |
|---|---|
| Research Institution | Purdue University |
| Key Finding | Imbalance in human values in AI systems favoring information and utility over prosocial values. |
| Method Used | Reinforcement learning from human feedback, using curated datasets of human preferences. |
| Types of Values Examined | Well-being, justice, human rights, duty, wisdom, civility, empathy. |
| Most Common Values in AI Datasets | Wisdom and knowledge, information seeking. |
| Least Common Values in AI Datasets | Justice, human rights, animal rights. |
| Implications of Findings | AI systems may not address complex social issues effectively due to value imbalance. |
| Importance of the Research | Helps AI companies create balanced datasets reflecting community values for ethical AI. |
| Researcher | Ike Obi, Ph.D. student in Computer and Information Technology. |

Understanding Human Values in AI Systems

AI systems are powerful tools that can transform how we interact with technology. However, the values they reflect can be unbalanced. In our research at Purdue University, we discovered that many AI systems focus more on information and utility than on important values like empathy, justice, and well-being. This imbalance can affect how AI interacts with people and handles sensitive topics, which makes it crucial to understand the human values these systems represent.

To explore these values, we built a taxonomy of human values drawn from moral philosophy and social studies. We found that while AI is good at answering everyday questions, it often lacks the depth needed for more complex discussions about human rights and justice. This gap highlights the importance of designing AI systems to support not just information seeking but also the broader values that matter to society.

The Role of Reinforcement Learning in AI Ethics

Reinforcement learning from human feedback is a method that helps AI systems learn from human preferences. By using curated datasets that reflect human values, researchers can guide AI behavior to be more helpful and honest. This approach is crucial for preventing harmful content from affecting how AI responds to users. It represents a significant step towards aligning AI systems with the values we hold dear as a society.
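To make the idea of learning from human preferences concrete, here is a minimal sketch of what one preference record and one training signal might look like. It is an illustration under stated assumptions, not the setup used in our study: the `PreferencePair` record, the example text, and the placeholder reward scores are all hypothetical, and the pairwise (Bradley-Terry style) loss is one common formulation that this article does not itself specify.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One hypothetical record from a human-preference dataset."""
    prompt: str
    chosen: str    # response the human annotator preferred
    rejected: str  # response the annotator ranked lower


def pairwise_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(r_chosen - r_rejected))."""
    margin = reward_chosen - reward_rejected
    # log1p(exp(-margin)) is algebraically equal to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))


# Made-up example; not taken from any real dataset.
pair = PreferencePair(
    prompt="How do I book a flight?",
    chosen="Compare fares on an airline or travel site, then pick dates and pay.",
    rejected="I don't know.",
)

# Placeholder scores standing in for a reward model's output (assumed values).
loss_when_agreeing = pairwise_loss(reward_chosen=2.0, reward_rejected=0.0)
loss_when_disagreeing = pairwise_loss(reward_chosen=0.0, reward_rejected=2.0)
print(loss_when_agreeing < loss_when_disagreeing)
```

The loss is small when the scores agree with the annotator's choice and large when they do not. That is why the mix of values represented in the preference pairs matters: the model is pushed toward whatever the curated examples reward.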

Our study shows that while many companies are trying to improve their AI systems, there is still a long way to go. By systematically analyzing the datasets used to train AI, we can identify where improvements are needed. This technique not only helps in understanding current values but also encourages AI developers to create systems that better reflect the needs and values of the communities they serve.

The Importance of Balanced Datasets for AI Development

Balanced datasets are essential for training AI systems that can serve all members of society effectively. Our research revealed that many datasets contain a strong emphasis on values like wisdom and knowledge, but fall short in areas such as empathy and social justice. This imbalance could lead to AI systems that do not fully understand or respond appropriately to critical social issues, which is a significant concern as AI becomes more prevalent in areas like healthcare and law.
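One simple way to surface such an imbalance is to tally how often each value appears among labeled examples and flag the rare ones. The sketch below assumes each example has already been annotated with a single value label. The labels, the counts, and the 15% threshold are invented for illustration and are not figures from our study.

```python
from collections import Counter

# Hypothetical value labels, one per annotated example (made-up data).
labels = [
    "information seeking", "wisdom and knowledge", "information seeking",
    "wisdom and knowledge", "information seeking", "well-being",
    "empathy", "information seeking", "wisdom and knowledge", "justice",
]


def value_shares(value_labels):
    """Return each value's share of all labeled examples."""
    counts = Counter(value_labels)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


def underrepresented(shares, threshold):
    """List, in alphabetical order, values whose share is below the threshold."""
    return sorted(value for value, share in shares.items() if share < threshold)


shares = value_shares(labels)
rare = underrepresented(shares, threshold=0.15)  # threshold is an assumption

# Print values from most to least common, then the flagged ones.
for value, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{value}: {share:.0%}")
print("Underrepresented:", rare)
```

A real audit would need a defensible labeling scheme and a threshold chosen for the application, but even a tally this simple shows where additional examples might be needed.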

By making the values in AI datasets visible, we can help companies improve their training data. This not only enhances the quality of AI responses but also ensures these systems can better address the complex issues we face as a society. As researchers and developers work together, the goal is to create AI that not only informs but also supports the well-being of all individuals, promoting a more equitable and just society.

The Importance of Value Diversity in AI Training Datasets

Diversity in values within AI training datasets is crucial for creating systems that reflect the complexities of human society. When datasets are skewed heavily toward certain values, such as utility or information, the AI systems developed from them may lack the ability to engage with nuanced issues like empathy and justice. This can lead to responses that are not only unhelpful but potentially harmful, as they overlook the diverse perspectives and needs of users.

Moreover, the implications of value imbalances extend beyond individual interactions. As AI systems are increasingly deployed in sensitive areas like healthcare, law, and public policy, their ability to navigate complex social issues becomes paramount. Ethical AI should embody a broad spectrum of human values to ensure that it serves all segments of society, fostering trust and promoting well-being in the communities it impacts.

Frequently Asked Questions

What are **human values** in AI systems?

**Human values** are important ideas like kindness, fairness, and helping others that we want to see in AI. Researchers study these values to make sure AI acts in ways that are good for people.

Why do AI systems need **reinforcement learning**?

**Reinforcement learning** from human feedback trains AI on examples of what people prefer. It teaches AI to be more **helpful** and **honest** by steering its answers toward those preferences.

What did the study at Purdue University find about **AI datasets**?

The study found that AI datasets had lots of examples for questions like booking flights but lacked examples for important topics like **empathy** and **justice**. This shows an imbalance in values.

How can **AI companies** improve their systems?

AI companies can improve by using the study’s findings to make their datasets more balanced, including more examples of values like **human rights** and **tolerance**.

Why is it important for AI to reflect **community values**?

AI should reflect **community values** so it can make better decisions in areas like healthcare and law. This helps ensure AI serves everyone’s best interests.

What are some examples of values researchers focus on in AI?

Researchers focus on values like **well-being**, **justice**, **wisdom**, and **helpfulness**. These values guide AI in making ethical choices.

How does the **taxonomy of human values** help in AI development?

The **taxonomy of human values** is a way to categorize important ideas. It helps researchers understand and improve how AI systems are trained to reflect these values.

Summary

This article outlines research by a Purdue University team that revealed a significant imbalance in human values within AI training datasets. These datasets emphasize information and utility values over prosocial and civic values, which raises potential ethical issues as AI is integrated into critical sectors. The researchers developed a taxonomy of human values and used it to examine three open-source datasets, finding few examples that address empathy, justice, and human rights. The study highlights the importance of aligning AI systems with a broader spectrum of values so they can serve society ethically, and it gives AI companies insights for improving their datasets to better reflect community values.
