New research from iProov highlights a pressing concern: most people cannot reliably detect deepfakes, the highly realistic AI-generated videos and images designed to impersonate real individuals. In the study of 2,000 consumers in the UK and US, 99.9% of participants failed to accurately identify deepfakes, which points to a significant vulnerability to deception. The findings below show why greater awareness and more advanced security measures are urgently needed.

| Key Findings | Statistics | Implications |
|---|---|---|
| Deepfake detection is alarmingly low. Only 0.1% of participants could identify deepfakes correctly, indicating a serious risk of deception in daily life. | 0.1% | Increased vulnerability to fraud due to lack of awareness. |

Understanding Deepfakes

Deepfakes are realistic-looking videos and images made by artificial intelligence. This technology can create convincing impersonations of real people, making it hard for viewers to tell what’s real and what’s fake. The new research from iProov shows that only a tiny group of people, just 0.1%, can recognize these deepfakes correctly. This means that most people struggle with identifying these deceptive images and videos in everyday life.

As deepfake technology improves, fake content becomes even harder to detect. Many individuals don’t know what deepfakes are, which adds to the problem. The study found that many older adults, including nearly 39% of those aged 65 and over, had never heard of deepfakes, which leaves them more vulnerable to being tricked. This lack of awareness is why it’s crucial for everyone to learn about deepfakes and how they can affect our lives.

The Risks of Deepfakes

Deepfakes pose serious risks to personal and financial security. Criminals can use deepfake videos to impersonate someone else and trick people into giving away sensitive information. The iProov study found that over 60% of participants felt confident in their ability to spot deepfakes despite the very low detection rate, and this overconfidence can lead to dangerous situations. Many people also do not know how to report a deepfake when they see one, which allows these harmful videos to spread further.

Moreover, deepfakes can damage trust in social media platforms. People are beginning to see these platforms as sources of misinformation, which can affect how they interact online. The fear of being deceived by deepfakes also makes people less likely to believe what they see on their screens. With nearly three-quarters of people concerned about the impact of deepfakes, it’s clear that more awareness and better tools are needed to fight against these digital threats.

Combating Deepfake Threats

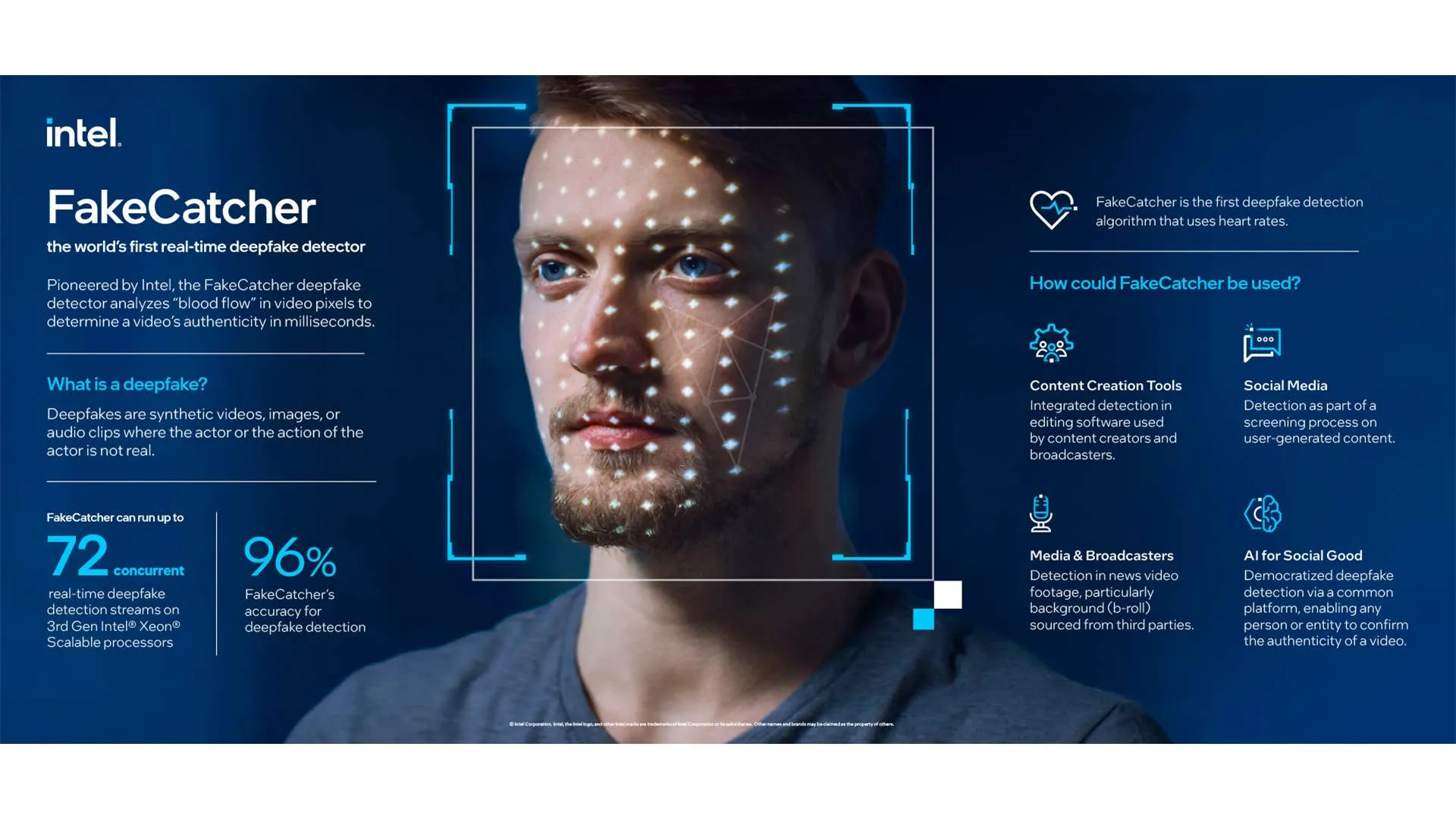

To protect ourselves from deepfakes, we need to use advanced technology. Experts like Professor Edgar Whitley suggest that organizations should not rely solely on human judgment to identify deepfakes. Instead, they must implement biometric security measures, such as facial recognition with liveness detection. This technology helps confirm that a person is who they say they are, making it harder for criminals to exploit deepfake technology.
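As a rough illustration of the idea above, the sketch below shows a verification decision that requires both a face match and a liveness check, so that a convincing face alone is not enough. It is a minimal sketch under stated assumptions: the `VerificationResult` type, the two scores, and the 0.9 thresholds are hypothetical and do not come from the iProov study or any real biometric product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationResult:
    # Hypothetical score in [0, 1]: similarity to the enrolled face.
    face_match_score: float
    # Hypothetical score in [0, 1]: confidence that a live person is present.
    liveness_score: float


def is_verified(result, match_threshold=0.9, liveness_threshold=0.9):
    """Accept only when BOTH checks pass (thresholds are illustrative)."""
    return (
        result.face_match_score >= match_threshold
        and result.liveness_score >= liveness_threshold
    )


# A deepfake may match the target's face closely yet fail the liveness check.
genuine_user = VerificationResult(face_match_score=0.95, liveness_score=0.97)
suspected_deepfake = VerificationResult(face_match_score=0.99, liveness_score=0.20)

print(is_verified(genuine_user))        # True
print(is_verified(suspected_deepfake))  # False
```

The design choice worth noting is the `and`: a high face-match score cannot compensate for a failed liveness check.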

Additionally, education is key in the battle against deepfakes. Individuals must learn to critically assess the information they see online. Only a small share of people (11% in the study) critically check sources when they suspect a deepfake, and that needs to change. By raising awareness about deepfakes and encouraging people to use secure verification methods, we can help reduce the risks associated with this growing problem.

Understanding the Technology Behind Deepfakes

Deepfakes are crafted using sophisticated artificial intelligence algorithms, primarily deep learning techniques that analyze vast amounts of visual data. This technology enables the creation of highly realistic videos and images that can convincingly mimic real individuals. By training on existing media, AI can generate new content that appears authentic, making it increasingly difficult for the human eye to detect the differences. As these technologies evolve, so does the complexity of the deepfakes produced.

The underlying technology of deepfakes relies on neural networks, particularly Generative Adversarial Networks (GANs), which pit two AI systems against each other. One network, the generator, produces fake content, while the other, the discriminator, judges whether that content looks authentic. Each round of feedback pushes the generator toward more convincing output. This process increases the realism of the fakes and also makes detection harder, often leaving consumers vulnerable to deception and misinformation.
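The adversarial setup can be sketched with a deliberately tiny example. The code below is a toy illustration and not how real deepfake systems are built: instead of images, the "real" data is a single number drawn from an assumed bell curve centred on 4, the generator is a one-line formula, and the discriminator is a simple logistic scorer. All names and parameters (`train_toy_gan`, `a`, `b`, `w`, `c`, the learning rate) are assumptions made for illustration.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function, returns a value in [0, 1]."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # assumed "real" data distribution
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        fake = a * z + b

        # Discriminator step: lower -log D(real) - log(1 - D(fake)),
        # i.e. score real samples high and fake samples low.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: lower -log D(fake),
        # i.e. change the fake so the discriminator scores it higher.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake

    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator: fake = {a:.2f} * z + {b:.2f}")
print(f"discriminator: D(x) = sigmoid({w:.2f} * x + {c:.2f})")
```

Each loop iteration is one round of the contest: the discriminator first adjusts to separate real from fake, then the generator adjusts to fool the updated discriminator. Real systems apply the same alternating idea to deep networks and image data, which is far more demanding to train.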

The Psychological Effects of Deepfake Exposure

Exposure to deepfakes can lead to psychological distress, particularly as society grapples with issues of trust and authenticity in media. When individuals realize they have been misled by deepfake content, it can result in feelings of betrayal and confusion, eroding confidence in visual information. This psychological impact extends beyond personal experiences; it can contribute to broader societal anxieties regarding misinformation and the integrity of news sources.

Additionally, the prevalence of deepfakes may foster a sense of skepticism towards legitimate content. As people become aware of the potential for manipulation, they may question the authenticity of even the most credible sources, leading to a pervasive distrust of visual media. This shift in perception can undermine critical thinking and hinder informed decision-making, complicating efforts to navigate an increasingly complex digital landscape.

The Role of Education in Combating Deepfakes

Educating the public about deepfakes is crucial in empowering individuals to discern real content from fake. Awareness campaigns highlighting the characteristics of deepfakes and the technology behind them can help demystify these AI-generated creations. By equipping people with knowledge about how deepfakes are made and the potential harms they pose, society can cultivate a more informed citizenry capable of approaching digital content critically.

Moreover, educational initiatives can target specific demographics, particularly older generations who may be less familiar with digital technologies. Workshops, online courses, and public service announcements can play a vital role in bridging the knowledge gap and fostering a culture of vigilance. As consumers become more adept at identifying deepfakes, the overall impact of misinformation can be mitigated, promoting a healthier information ecosystem.

Future Trends in Deepfake Technology and Security Solutions

As deepfake technology continues to evolve, so too must the strategies for detecting and combating these threats. Innovations in biometric verification, such as enhanced facial recognition systems and AI-driven detection tools, are emerging as essential components in the fight against deepfakes. Organizations are increasingly investing in advanced security solutions that utilize real-time data analysis to identify discrepancies in video and image content, enhancing their ability to thwart potential fraud.

Looking forward, collaboration between technology companies, policymakers, and cybersecurity experts will be vital in developing comprehensive frameworks to address the deepfake dilemma. This includes not only improving detection technologies but also establishing legal and ethical guidelines surrounding the creation and distribution of deepfake content. By fostering multi-stakeholder partnerships, society can work towards a future where the risks associated with deepfakes are effectively managed, and public trust in digital media is restored.

Frequently Asked Questions

What is a deepfake?

A **deepfake** is a type of fake video or image that uses **artificial intelligence** to make it look like someone is doing or saying something they didn’t actually do. It can be very realistic!

Why is it hard to spot deepfakes?

Many people struggle to spot deepfakes because they look so real. In the iProov study, only **0.1%** of people could tell the difference between real and fake videos and images.

Who is most at risk of believing deepfakes?

Older people, especially those aged **55-64** and **65+**, are more at risk because many of them had never heard of deepfakes before taking part in the study.

How do deepfake videos compare to deepfake images?

It’s harder to detect deepfake **videos** than **images**. People were **36%** less likely to correctly identify a fake video than a fake image, which raises concerns, especially for video calls.

What can people do to avoid being fooled by deepfakes?

To avoid being tricked, people should check information from different sources and be careful about what they see online. Only **11%** of people currently check sources critically.

How do deepfakes affect trust in social media?

Deepfakes make people trust social media less. After learning about them, **49%** of users reported feeling less trust in platforms like **Meta** and **TikTok**.

What should organizations do to fight deepfakes?

Organizations need to use advanced **biometric technology**, such as **facial recognition** with liveness detection, to verify that someone is a real person and who they say they are. This helps protect against deepfake fraud.

Summary

New research from iProov highlights significant challenges in detecting deepfakes: in a study of 2,000 US and UK consumers, only 0.1% of participants accurately distinguished real from fake content. The findings reveal a lack of awareness, especially among older generations, with nearly 39% of those aged 65+ unaware of deepfakes. Deepfake videos are particularly difficult to detect, which raises fraud concerns. Despite the low detection rates, over 60% of participants felt confident in their ability to identify deepfakes. The study emphasizes the urgent need for advanced biometric security measures to combat the growing threat of deepfakes across sectors.
